SparkSqlAstBuilder

SparkSqlAstBuilder is an AstBuilder that converts SQL statements into Catalyst expressions, logical plans or table identifiers (using visit callback methods).
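The three kinds of results can be seen through the session's parser. This is a minimal sketch, assuming a Spark SQL dependency on the classpath and a local master. The object name `ParseExamples`, the helper `parseAll`, the application name and the sample SQL strings are all made up for illustration. The concrete plan and expression classes printed depend on the Spark version, so none are asserted here.

```scala
import org.apache.spark.sql.SparkSession

object ParseExamples {
  // Hypothetical helper: parses one statement, one expression and one table name.
  def parseAll(spark: SparkSession) = {
    val parser = spark.sessionState.sqlParser

    // SQL statement -> (unresolved) logical plan
    val plan = parser.parsePlan("SELECT 1")

    // SQL expression text -> Catalyst expression
    val expr = parser.parseExpression("a + 1")

    // Qualified table name -> TableIdentifier
    val tableId = parser.parseTableIdentifier("db1.t1")

    (plan, expr, tableId)
  }

  def main(args: Array[String]): Unit = {
    // Local session for illustration only; in spark-shell `spark` already exists.
    val spark = SparkSession.builder()
      .master("local[*]")
      .appName("SparkSqlAstBuilderDemo") // hypothetical application name
      .getOrCreate()

    val (plan, expr, tableId) = parseAll(spark)
    println(plan.getClass.getName)
    println(expr.getClass.getName)
    println(s"database=${tableId.database}, table=${tableId.table}")

    spark.stop()
  }
}
```

In `spark-shell` the same three calls can be made directly on `spark.sessionState.sqlParser`.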

Note

Spark SQL uses the ANTLR parser generator for parsing structured text.

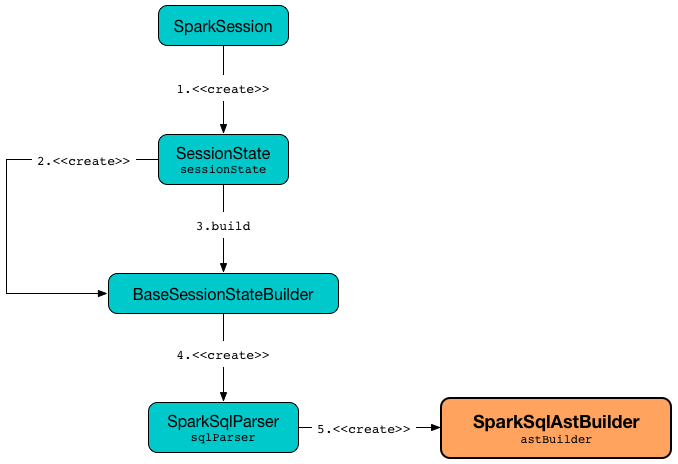

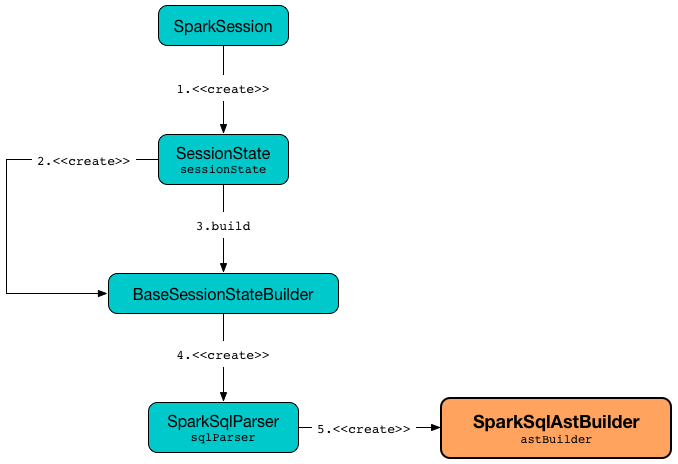

SparkSqlAstBuilder is created when SparkSqlParser is created (which is when SparkSession is requested for the lazily-created SessionState).
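The creation order described above can be modelled with a `lazy val`. The following is a simplified sketch in plain Scala, not Spark's code: every class name (`ToySession`, `ToySessionState`, `ToySqlParser`, `ToyAstBuilder`) is hypothetical, and the `log` buffer exists only to record when each object is constructed.

```scala
import scala.collection.mutable.ListBuffer

// Hypothetical stand-in for the AST builder; logs its own construction.
class ToyAstBuilder(log: ListBuffer[String]) {
  log += "ToyAstBuilder"
}

// Hypothetical stand-in for the parser: the builder is created together with it.
class ToySqlParser(log: ListBuffer[String]) {
  val astBuilder = new ToyAstBuilder(log)
  log += "ToySqlParser"
}

// Hypothetical stand-in for the session state that owns the parser.
class ToySessionState(log: ListBuffer[String]) {
  val sqlParser = new ToySqlParser(log)
  log += "ToySessionState"
}

// Hypothetical stand-in for the session: the state is built on first access only.
class ToySession(log: ListBuffer[String]) {
  log += "ToySession"
  lazy val sessionState = new ToySessionState(log)
}

object LazyCreationSketch {
  def main(args: Array[String]): Unit = {
    val log = ListBuffer.empty[String]
    val session = new ToySession(log)
    println(log.toList) // only the session has been created so far

    val state = session.sessionState // first access triggers the whole chain
    println(log.toList)
    println(state.sqlParser.astBuilder != null)
  }
}
```

Until `sessionState` is first requested, neither the parser nor the builder exists, which is the behaviour the paragraph above attributes to `SparkSession`.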

scala> :type spark.sessionState.sqlParser

org.apache.spark.sql.catalyst.parser.ParserInterface

import org.apache.spark.sql.execution.SparkSqlParser

val sqlParser = spark.sessionState.sqlParser.asInstanceOf[SparkSqlParser]

scala> :type sqlParser.astBuilder

org.apache.spark.sql.execution.SparkSqlAstBuilder

SparkSqlAstBuilder takes a SQLConf when created.

| Callback Method | ANTLR rule / labeled alternative | Spark SQL Entity |
|---|---|---|
| | | CreateViewCommand |
| | | ShowCreateTableCommand logical command |
| | | TruncateTableCommand logical command |
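The relationship between a visit callback, a grammar alternative and the resulting entity can be sketched in plain Scala. This is a toy model under stated assumptions, not Spark's implementation. The `*Context` classes stand in for ANTLR-generated context classes, the `Toy*` case classes stand in for logical commands, and the callback names follow ANTLR's `visit<Label>` naming convention for labeled alternatives rather than being copied from Spark.

```scala
// Hypothetical parse-tree contexts, one per labeled alternative.
sealed trait ToyContext
final case class CreateViewContext(view: String, query: String) extends ToyContext
final case class ShowCreateTableContext(table: String) extends ToyContext
final case class TruncateTableContext(table: String) extends ToyContext

// Hypothetical logical commands produced by the callbacks.
sealed trait ToyCommand
final case class ToyCreateView(view: String, query: String) extends ToyCommand
final case class ToyShowCreateTable(table: String) extends ToyCommand
final case class ToyTruncateTable(table: String) extends ToyCommand

class SketchAstBuilder {
  // One callback per alternative; each turns a context into a command.
  def visitCreateView(ctx: CreateViewContext): ToyCommand =
    ToyCreateView(ctx.view, ctx.query)

  def visitShowCreateTable(ctx: ShowCreateTableContext): ToyCommand =
    ToyShowCreateTable(ctx.table)

  def visitTruncateTable(ctx: TruncateTableContext): ToyCommand =
    ToyTruncateTable(ctx.table)

  // Dispatch on the context type, as a generated visitor would do via accept().
  def visit(ctx: ToyContext): ToyCommand = ctx match {
    case c: CreateViewContext      => visitCreateView(c)
    case c: ShowCreateTableContext => visitShowCreateTable(c)
    case c: TruncateTableContext   => visitTruncateTable(c)
  }
}

object SketchAstBuilderDemo {
  def main(args: Array[String]): Unit = {
    val builder = new SketchAstBuilder
    println(builder.visit(TruncateTableContext("t1")))
    println(builder.visit(CreateViewContext("v1", "SELECT 1")))
  }
}
```

Keeping one callback per alternative means that supporting a new statement only requires a new context type and a new `visit` method, which is why such tables list callbacks row by row.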

| Parsing Handler | LogicalPlan Added |

|---|---|