HiveExternalCatalog — Hive-Aware Metastore of Permanent Relational Entities

HiveExternalCatalog is an external catalog of permanent relational entities (metastore).

HiveExternalCatalog is used by a SparkSession with Hive support enabled.

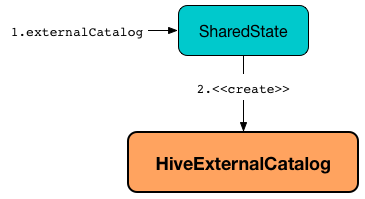

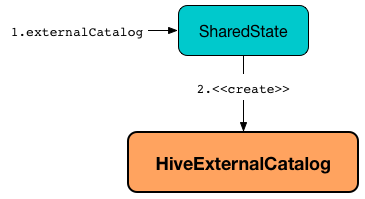

HiveExternalCatalog is created when SharedState is requested for the ExternalCatalog (and the spark.sql.catalogImplementation internal configuration property is hive).
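Which external catalog gets created comes down to the value of spark.sql.catalogImplementation. The following is a minimal plain-Scala sketch of that decision, not Spark's API: the CatalogSelection object and its method are hypothetical, and the sketch assumes the two supported values hive and in-memory map to HiveExternalCatalog and InMemoryCatalog respectively.

```scala
// Hypothetical model of how spark.sql.catalogImplementation selects the
// ExternalCatalog class to instantiate. Illustration only, not Spark code.
object CatalogSelection {
  val HiveCatalogClass = "org.apache.spark.sql.hive.HiveExternalCatalog"
  val InMemoryCatalogClass = "org.apache.spark.sql.catalyst.catalog.InMemoryCatalog"

  // Maps a catalog implementation value to the fully-qualified catalog class name.
  def externalCatalogClassName(catalogImplementation: String): String =
    catalogImplementation match {
      case "hive"      => HiveCatalogClass
      case "in-memory" => InMemoryCatalogClass
      case other =>
        // Assumption: unsupported values are simply rejected in this sketch.
        throw new IllegalArgumentException(s"Unsupported catalog implementation: $other")
    }
}
```

For example, externalCatalogClassName("hive") yields the HiveExternalCatalog class name that shows up in the REPL session below.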

Note

The Hadoop configuration used to create a HiveExternalCatalog is the default Hadoop configuration from Spark Core's SparkContext.hadoopConfiguration, extended with the Spark properties that use the spark.hadoop prefix (with the prefix removed).
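The effect of the spark.hadoop prefix can be modeled as stripping the prefix and overlaying the remaining key on the Hadoop configuration. This sketch uses plain Scala maps in place of SparkConf and Hadoop's Configuration; HadoopConfSketch and mergeHadoopProps are hypothetical names used for illustration only.

```scala
// Hypothetical sketch: spark.hadoop.-prefixed Spark properties become Hadoop
// properties once the prefix is stripped. Plain maps stand in for the real types.
object HadoopConfSketch {
  val SparkHadoopPrefix = "spark.hadoop."

  // Overlays the stripped spark.hadoop.* entries on top of the base Hadoop properties.
  def mergeHadoopProps(
      baseHadoopProps: Map[String, String],
      sparkProps: Map[String, String]): Map[String, String] = {
    val fromSpark = sparkProps.collect {
      case (key, value) if key.startsWith(SparkHadoopPrefix) =>
        key.stripPrefix(SparkHadoopPrefix) -> value
    }
    // Entries derived from Spark properties win over the base values.
    baseHadoopProps ++ fromSpark
  }
}
```

With this model, setting spark.hadoop.hive.metastore.uris in Spark ends up as hive.metastore.uris on the Hadoop side, while Spark-only properties such as spark.app.name are ignored.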

HiveExternalCatalog uses a HiveClient to interact with a Hive metastore.

```scala
import org.apache.spark.sql.internal.StaticSQLConf

val catalogType = spark.conf.get(StaticSQLConf.CATALOG_IMPLEMENTATION.key)

scala> println(catalogType)
hive

// Alternatively...
scala> spark.sessionState.conf.getConf(StaticSQLConf.CATALOG_IMPLEMENTATION)
res1: String = hive

// Or you could use the property key by name
scala> spark.conf.get("spark.sql.catalogImplementation")
res2: String = hive

val metastore = spark.sharedState.externalCatalog

scala> :type metastore
org.apache.spark.sql.catalyst.catalog.ExternalCatalog

// Since Hive is enabled, HiveExternalCatalog is the metastore
scala> println(metastore)
org.apache.spark.sql.hive.HiveExternalCatalog@25e95d04
```

Tip

Use the spark.sql.warehouse.dir Spark property to change the location of the Hive warehouse, i.e. the default location of managed databases and tables.

Refer to SharedState to learn about (the low-level details of) Spark SQL support for Apache Hive.

See also the official Hive Metastore Administration document.

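Tip

Enable ALL logging level for the org.apache.spark.sql.hive.HiveExternalCatalog logger to see what happens inside.

Add the following line to conf/log4j.properties:

log4j.logger.org.apache.spark.sql.hive.HiveExternalCatalog=ALL

Refer to Logging.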

Creating HiveExternalCatalog Instance

HiveExternalCatalog takes the following to be created:

- SparkConf
- Hadoop Configuration

HiveClient — client Lazy Property

```scala
client: HiveClient
```

client is a HiveClient to access a Hive metastore.

client is created lazily (when first requested) using the HiveUtils utility (with the SparkConf and Hadoop Configuration).
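The lazy creation of client corresponds to a Scala lazy val. The sketch below uses hypothetical stand-in classes (FakeHiveClient, CatalogModel) instead of HiveClient and HiveUtils, and only demonstrates that the client is built once, on first access, and reused afterwards.

```scala
// Hypothetical model (not Spark's classes) of a catalog whose metastore
// client is created lazily, i.e. only when first requested.
object LazyClientSketch {
  final class FakeHiveClient(val conf: Map[String, String])

  final class CatalogModel(conf: Map[String, String]) {
    // Counts how many clients have been built so far.
    var clientsCreated = 0

    // Initialized on first access only; later accesses return the same instance.
    lazy val client: FakeHiveClient = {
      clientsCreated += 1
      new FakeHiveClient(conf)
    }
  }
}
```

Creating a CatalogModel does not touch the client at all; the first read of client builds it and every later read returns the cached instance.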


getRawTable Method

```scala
getRawTable(
  db: String,
  table: String): CatalogTable
```

getRawTable returns the CatalogTable metadata of the input table.

Internally, getRawTable requests the HiveClient for the table metadata from a Hive metastore.

Note

getRawTable is used when HiveExternalCatalog is requested to renameTable, alterTable, alterTableStats, getTable, alterPartitions and listPartitionsByFilter.

doAlterTableStats Method

```scala
doAlterTableStats(
  db: String,
  table: String,
  stats: Option[CatalogStatistics]): Unit
```

Note

doAlterTableStats is part of the ExternalCatalog Contract to alter the statistics of a table.

doAlterTableStats…FIXME

listPartitionsByFilter Method

```scala
listPartitionsByFilter(
  db: String,
  table: String,
  predicates: Seq[Expression],
  defaultTimeZoneId: String): Seq[CatalogTablePartition]
```

Note

listPartitionsByFilter is part of the ExternalCatalog Contract to…FIXME.

listPartitionsByFilter…FIXME

alterPartitions Method

```scala
alterPartitions(
  db: String,
  table: String,
  newParts: Seq[CatalogTablePartition]): Unit
```

Note

alterPartitions is part of the ExternalCatalog Contract to…FIXME.

alterPartitions…FIXME

getTable Method

```scala
getTable(
  db: String,
  table: String): CatalogTable
```

Note

getTable is part of the ExternalCatalog Contract to…FIXME.

getTable…FIXME

doAlterTable Method

```scala
doAlterTable(
  tableDefinition: CatalogTable): Unit
```

Note

doAlterTable is part of the ExternalCatalog Contract to alter a table.

doAlterTable…FIXME

getPartition Method

```scala
getPartition(
  db: String,
  table: String,
  spec: TablePartitionSpec): CatalogTablePartition
```

Note

getPartition is part of the ExternalCatalog Contract to…FIXME.

getPartition…FIXME

getPartitionOption Method

```scala
getPartitionOption(
  db: String,
  table: String,
  spec: TablePartitionSpec): Option[CatalogTablePartition]
```

Note

getPartitionOption is part of the ExternalCatalog Contract to…FIXME.

getPartitionOption…FIXME

Retrieving CatalogTablePartition of Table — listPartitions Method

```scala
listPartitions(
  db: String,
  table: String,
  partialSpec: Option[TablePartitionSpec] = None): Seq[CatalogTablePartition]
```

Note

listPartitions is part of the ExternalCatalog Contract to list partitions of a table.

listPartitions…FIXME
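The optional partialSpec narrows the result to the partitions whose specs agree with every column-value pair it contains (e.g. a partial spec a=1 selects both a=1/b=2 and a=1/b=3). The sketch below models only that matching rule over in-memory partition specs; it assumes the usual partial-spec semantics and does not show how HiveExternalCatalog actually queries the metastore. PartialSpecSketch is hypothetical, and TablePartitionSpec is defined locally as a Map[String, String].

```scala
// Hypothetical model of partial partition spec filtering (not Spark code).
object PartialSpecSketch {
  // Partition column name -> partition value.
  type TablePartitionSpec = Map[String, String]

  // A partition matches when every column in the partial spec has the same value.
  def matchesPartialSpec(
      partialSpec: TablePartitionSpec,
      partitionSpec: TablePartitionSpec): Boolean =
    partialSpec.forall { case (column, value) => partitionSpec.get(column).contains(value) }

  // No partial spec means all partitions are returned.
  def listPartitions(
      all: Seq[TablePartitionSpec],
      partialSpec: Option[TablePartitionSpec] = None): Seq[TablePartitionSpec] =
    partialSpec match {
      case None       => all
      case Some(spec) => all.filter(partition => matchesPartialSpec(spec, partition))
    }
}
```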

doCreateTable Method

```scala
doCreateTable(
  tableDefinition: CatalogTable,
  ignoreIfExists: Boolean): Unit
```

Note

doCreateTable is part of the ExternalCatalog Contract to…FIXME.

doCreateTable…FIXME

doAlterTableDataSchema Method

```scala
doAlterTableDataSchema(
  db: String,
  table: String,
  newDataSchema: StructType): Unit
```

Note

doAlterTableDataSchema is part of the ExternalCatalog Contract to…FIXME.

doAlterTableDataSchema…FIXME

createTable Method

```scala
createTable(
  tableDefinition: CatalogTable,
  ignoreIfExists: Boolean): Unit
```

Note

createTable is part of the ExternalCatalog Contract to…FIXME.

createTable…FIXME

createDataSourceTable Internal Method

```scala
createDataSourceTable(
  table: CatalogTable,
  ignoreIfExists: Boolean): Unit
```

createDataSourceTable…FIXME

Note

createDataSourceTable is used when HiveExternalCatalog is requested to createTable.

saveTableIntoHive Internal Method

```scala
saveTableIntoHive(
  tableDefinition: CatalogTable,
  ignoreIfExists: Boolean): Unit
```

saveTableIntoHive…FIXME

Note

saveTableIntoHive is used when HiveExternalCatalog is requested to createDataSourceTable.

restoreTableMetadata Internal Method

```scala
restoreTableMetadata(
  inputTable: CatalogTable): CatalogTable
```

restoreTableMetadata…FIXME

tableMetaToTableProps Internal Method

```scala
tableMetaToTableProps(
  table: CatalogTable): Map[String, String]

tableMetaToTableProps(
  table: CatalogTable,
  schema: StructType): Map[String, String]
```

tableMetaToTableProps…FIXME

Note

tableMetaToTableProps is used when HiveExternalCatalog is requested to doAlterTableDataSchema and doCreateTable (and createDataSourceTable).

Restoring Data Source Table — restoreDataSourceTable Internal Method

```scala
restoreDataSourceTable(
  table: CatalogTable,
  provider: String): CatalogTable
```

restoreDataSourceTable…FIXME

Note

restoreDataSourceTable is used exclusively when HiveExternalCatalog is requested to restoreTableMetadata (for a regular data source table, i.e. one with the provider specified in the table properties).

Restoring Hive Serde Table — restoreHiveSerdeTable Internal Method

```scala
restoreHiveSerdeTable(
  table: CatalogTable): CatalogTable
```

restoreHiveSerdeTable…FIXME

Note

restoreHiveSerdeTable is used exclusively when HiveExternalCatalog is requested to restoreTableMetadata (when there is no provider specified in the table properties, which means this is a Hive serde table).
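The choice between restoreDataSourceTable and restoreHiveSerdeTable depends on whether a provider is recorded in the table properties. The sketch below models only the two cases described in the notes above; the key name spark.sql.sources.provider is an assumption, RestoreDispatchSketch and its types are hypothetical, and the real restoreTableMetadata may handle further cases.

```scala
// Hypothetical model of the restore dispatch in restoreTableMetadata.
object RestoreDispatchSketch {
  // Assumption: the data source provider is stored under this table property key.
  val DatasourceProviderKey = "spark.sql.sources.provider"

  sealed trait RestoredKind
  final case class DataSourceTable(provider: String) extends RestoredKind
  case object HiveSerdeTable extends RestoredKind

  // A provider in the table properties means a data source table;
  // no provider means a Hive serde table.
  def restoreKind(tableProperties: Map[String, String]): RestoredKind =
    tableProperties.get(DatasourceProviderKey) match {
      case Some(provider) => DataSourceTable(provider)
      case None           => HiveSerdeTable
    }
}
```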

getBucketSpecFromTableProperties Internal Method

```scala
getBucketSpecFromTableProperties(
  metadata: CatalogTable): Option[BucketSpec]
```

getBucketSpecFromTableProperties…FIXME

Note

getBucketSpecFromTableProperties is used when HiveExternalCatalog is requested to restoreHiveSerdeTable or restoreDataSourceTable.

Building Property Name for Column and Statistic Key — columnStatKeyPropName Internal Method

```scala
columnStatKeyPropName(
  columnName: String,
  statKey: String): String
```

columnStatKeyPropName builds a property name of the form spark.sql.statistics.colStats.[columnName].[statKey] for the input columnName and statKey.

Note

columnStatKeyPropName is used when HiveExternalCatalog is requested to statsToProperties and statsFromProperties.
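Since columnStatKeyPropName only concatenates strings, the property name format above can be sketched directly. The ColumnStatKeys object is hypothetical; the prefix follows the spark.sql.statistics.colStats.[columnName].[statKey] form described above.

```scala
// Hypothetical sketch of the column statistic property naming scheme.
object ColumnStatKeys {
  val StatisticsPrefix = "spark.sql.statistics."
  val ColStatsPrefix: String = StatisticsPrefix + "colStats."

  // Builds spark.sql.statistics.colStats.[columnName].[statKey]
  def columnStatKeyPropName(columnName: String, statKey: String): String =
    s"$ColStatsPrefix$columnName.$statKey"
}
```

For instance, the max statistic of an id column is stored under spark.sql.statistics.colStats.id.max.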

Converting Table Statistics to Properties — statsToProperties Internal Method

```scala
statsToProperties(
  stats: CatalogStatistics,
  schema: StructType): Map[String, String]
```

statsToProperties converts the table statistics to properties, i.e. key-value pairs that are persisted as properties in the table metadata in a Hive metastore (using the Hive client).

statsToProperties adds the following properties:

- spark.sql.statistics.totalSize with the total size (in bytes)
- (if defined) spark.sql.statistics.numRows with the number of rows

statsToProperties also converts the column statistics of every column (field) in schema to properties (named as column statistic properties) and adds them to the properties.
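statsToProperties can be sketched with plain Scala types. Assumptions: SimpleStats is a hypothetical stand-in for CatalogStatistics, each column's statistics are already flattened to a statKey-to-value map (roughly what a ColumnStat is converted to), and the schema is reduced to a list of column names. Only columns of the schema that have statistics contribute column properties in this simplification.

```scala
// Hypothetical, simplified model of statsToProperties (not Spark code).
object StatsToPropertiesSketch {
  val StatisticsPrefix = "spark.sql.statistics."

  final case class SimpleStats(
      sizeInBytes: BigInt,
      rowCount: Option[BigInt],
      colStats: Map[String, Map[String, String]])

  def columnStatKeyPropName(columnName: String, statKey: String): String =
    s"${StatisticsPrefix}colStats.$columnName.$statKey"

  def statsToProperties(stats: SimpleStats, schemaColumns: Seq[String]): Map[String, String] = {
    val builder = Map.newBuilder[String, String]
    // Table-level statistics: total size always, row count only if defined.
    builder += (StatisticsPrefix + "totalSize") -> stats.sizeInBytes.toString
    stats.rowCount.foreach(rows => builder += (StatisticsPrefix + "numRows") -> rows.toString)
    // Column-level statistics for the schema columns that have any.
    for {
      column <- schemaColumns
      colStat <- stats.colStats.get(column).toSeq
      (statKey, value) <- colStat
    } builder += columnStatKeyPropName(column, statKey) -> value
    builder.result()
  }
}
```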


Restoring Table Statistics from Properties (from Hive Metastore) — statsFromProperties Internal Method

```scala
statsFromProperties(
  properties: Map[String, String],
  table: String,
  schema: StructType): Option[CatalogStatistics]
```

statsFromProperties collects the statistics-related properties, i.e. the properties whose keys have the spark.sql.statistics prefix.

statsFromProperties returns None if there are no keys with the spark.sql.statistics prefix in properties.

If there are keys with the spark.sql.statistics prefix, statsFromProperties creates a ColumnStat (the column statistics) for every column in schema.

For every column name in schema, statsFromProperties first checks that the key spark.sql.statistics.colStats.[name].version exists (a marker that the statistics properties include statistics for the column). If so, statsFromProperties collects all the keys that start with the spark.sql.statistics.colStats.[name] prefix and converts them to a ColumnStat for the column.

In the end, statsFromProperties creates a CatalogStatistics with the following properties:

- sizeInBytes as the spark.sql.statistics.totalSize property
- rowCount as the spark.sql.statistics.numRows property
- colStats as the collection of the column names and their ColumnStat (calculated above)
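The steps of statsFromProperties can be sketched in plain Scala as the inverse of writing the statistics out. The sketch uses a hypothetical SimpleStats case class in place of CatalogStatistics and plain statKey-to-value maps in place of ColumnStat, and reduces the schema to column names. It returns None when there are no spark.sql.statistics keys, keeps column statistics only for columns whose version key is present, and then reads totalSize and numRows.

```scala
// Hypothetical, simplified model of statsFromProperties (not Spark code).
object StatsFromPropertiesSketch {
  val StatisticsPrefix = "spark.sql.statistics."
  val ColStatsPrefix: String = StatisticsPrefix + "colStats."

  final case class SimpleStats(
      sizeInBytes: BigInt,
      rowCount: Option[BigInt],
      colStats: Map[String, Map[String, String]])

  def statsFromProperties(
      properties: Map[String, String],
      schemaColumns: Seq[String]): Option[SimpleStats] = {
    val statsProps = properties.filter { case (key, _) => key.startsWith(StatisticsPrefix) }
    if (statsProps.isEmpty) None
    else {
      val colStats = schemaColumns.flatMap { column =>
        val columnPrefix = s"$ColStatsPrefix$column."
        // The version key marks that statistics exist for this column.
        if (statsProps.contains(columnPrefix + "version")) {
          val entries = statsProps.collect {
            case (key, value) if key.startsWith(columnPrefix) => key.stripPrefix(columnPrefix) -> value
          }
          Some(column -> entries)
        } else None
      }.toMap
      // Throws NoSuchElementException if totalSize is missing.
      Some(SimpleStats(
        sizeInBytes = BigInt(statsProps(StatisticsPrefix + "totalSize")),
        rowCount = statsProps.get(StatisticsPrefix + "numRows").map(value => BigInt(value)),
        colStats = colStats))
    }
  }
}
```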