HiveSessionCatalog — Hive-Specific Catalog of Relational Entities

HiveSessionCatalog is a session-scoped catalog of relational entities that is used when a SparkSession is created with Hive support enabled.

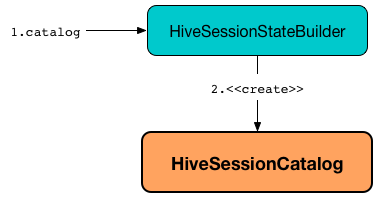

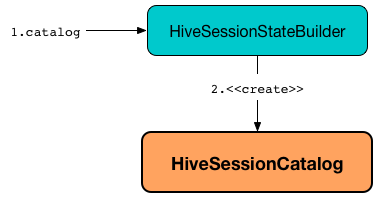

HiveSessionCatalog is available as the catalog property of SessionState when the SparkSession was created with Hive support enabled, which in the end sets the spark.sql.catalogImplementation internal configuration property to hive.
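The selection described above can be illustrated with a small, self-contained Scala model. It is a sketch under stated assumptions, not Spark's code: configuration is a plain `Map[String, String]`, and the hypothetical helpers `withHiveSupport`, `sessionStateBuilderFor` and `catalogFor` stand in for what `enableHiveSupport()` and Spark's internal session-state selection do. The class names appear only as strings. In Spark the property defaults to `in-memory`.

```scala
// Hypothetical model (not Spark's implementation) of how the value of
// spark.sql.catalogImplementation picks the session state builder and,
// through it, the SessionCatalog implementation of a new SparkSession.
object CatalogSelectionModel {
  val CatalogImplementationKey = "spark.sql.catalogImplementation"

  // Spark class names, used here only as plain strings.
  val HiveBuilder = "org.apache.spark.sql.hive.HiveSessionStateBuilder"
  val InMemoryBuilder = "org.apache.spark.sql.internal.SessionStateBuilder"

  // Hypothetical: the net effect of enabling Hive support is "hive" for the property.
  def withHiveSupport(conf: Map[String, String]): Map[String, String] =
    conf + (CatalogImplementationKey -> "hive")

  // "in-memory" is assumed when the property is not set.
  def sessionStateBuilderFor(conf: Map[String, String]): String =
    conf.getOrElse(CatalogImplementationKey, "in-memory") match {
      case "hive"      => HiveBuilder
      case "in-memory" => InMemoryBuilder
      case other =>
        throw new IllegalArgumentException(s"Unsupported catalog implementation: $other")
    }

  // The Hive builder is the one that creates a HiveSessionCatalog.
  def catalogFor(conf: Map[String, String]): String =
    if (sessionStateBuilderFor(conf) == HiveBuilder) "HiveSessionCatalog"
    else "SessionCatalog"
}
```

In an application, the corresponding call is `SparkSession.builder().enableHiveSupport().getOrCreate()`, which requires the Hive classes (the spark-hive module) on the classpath.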

```scala
import org.apache.spark.sql.internal.StaticSQLConf

val catalogType = spark.conf.get(StaticSQLConf.CATALOG_IMPLEMENTATION.key)

scala> println(catalogType)
hive

// You could also use the property key by name
scala> spark.conf.get("spark.sql.catalogImplementation")
res1: String = hive

// Since Hive is enabled, HiveSessionCatalog is the implementation
scala> spark.sessionState.catalog
res2: org.apache.spark.sql.catalyst.catalog.SessionCatalog = org.apache.spark.sql.hive.HiveSessionCatalog@1ae3d0a8
```

HiveSessionCatalog is created exclusively when HiveSessionStateBuilder is requested for the SessionCatalog.

HiveSessionCatalog uses the legacy HiveMetastoreCatalog (another session-scoped catalog of relational entities) exclusively so that the RelationConversions logical evaluation rule can convert Hive metastore relations to data source relations when executed.
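The following sketch models that division of labor: a RelationConversions-like rule decides whether a Hive table relation is convertible and, if so, delegates the conversion to the legacy metastore catalog held by the session catalog. All types here (`HiveRelation`, `HiveScan`, `DataSourceScan`, `MetastoreCatalogModel`, `SessionCatalogModel`, `RelationConversionsModel`) are hypothetical stand-ins. The serde-name check and the two flags are assumed to approximate Spark's `spark.sql.hive.convertMetastoreParquet` and `spark.sql.hive.convertMetastoreOrc` properties; treat the details as illustrative rather than as Spark's implementation.

```scala
import java.util.Locale

// Hypothetical stand-in for a Hive table relation: table name plus serde class.
final case class HiveRelation(table: String, serde: Option[String])

// Hypothetical logical plan nodes: a Hive scan and a data source scan.
sealed trait Plan
final case class HiveScan(rel: HiveRelation) extends Plan
final case class DataSourceScan(table: String, format: String) extends Plan

// Stand-in for the legacy HiveMetastoreCatalog: only the conversion is modeled.
final class MetastoreCatalogModel {
  def convert(rel: HiveRelation, format: String): Plan = DataSourceScan(rel.table, format)
}

// Stand-in for HiveSessionCatalog, which holds the legacy metastore catalog.
final class SessionCatalogModel(val metastoreCatalog: MetastoreCatalogModel)

// Stand-in for the RelationConversions rule.
object RelationConversionsModel {
  def apply(plan: Plan, catalog: SessionCatalogModel,
            convertParquet: Boolean, convertOrc: Boolean): Plan = plan match {
    case HiveScan(rel) =>
      // Convertibility is decided from the (lower-cased) serde class name.
      val serde = rel.serde.getOrElse("").toLowerCase(Locale.ROOT)
      if (serde.contains("parquet") && convertParquet)
        catalog.metastoreCatalog.convert(rel, "parquet")
      else if (serde.contains("orc") && convertOrc)
        catalog.metastoreCatalog.convert(rel, "orc")
      else plan // not convertible: keep the Hive relation as-is
    case other => other
  }
}
```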

Creating HiveSessionCatalog Instance

HiveSessionCatalog takes the following to be created:

- Legacy HiveMetastoreCatalog
- Hadoop Configuration
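A minimal sketch of how these two constructor arguments fit together, assuming the catalog is created lazily and once per session by the state builder. `LegacyMetastoreCatalog`, `HadoopConf`, `HiveSessionCatalogSketch` and `HiveSessionStateBuilderSketch` are hypothetical stand-ins for HiveMetastoreCatalog, Hadoop's `Configuration`, HiveSessionCatalog and HiveSessionStateBuilder, and only the two arguments listed above are modeled.

```scala
// Hypothetical stand-ins for HiveMetastoreCatalog and Hadoop's Configuration.
final class LegacyMetastoreCatalog(val sessionId: Int)
final case class HadoopConf(settings: Map[String, String])

// Models only the two constructor arguments listed above.
final class HiveSessionCatalogSketch(
    val metastoreCatalog: LegacyMetastoreCatalog,
    val hadoopConf: HadoopConf)

// Models the builder as the only place the catalog is created.
final class HiveSessionStateBuilderSketch(sessionId: Int, baseConf: Map[String, String]) {
  var created = 0 // counts how many catalogs this builder has created

  // Lazily initialized: built on first access, then reused for this session.
  lazy val catalog: HiveSessionCatalogSketch = {
    created += 1
    new HiveSessionCatalogSketch(new LegacyMetastoreCatalog(sessionId), HadoopConf(baseConf))
  }
}
```

Because each session gets its own builder, each session also gets its own catalog and its own legacy metastore catalog.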