DataStreamReader — Loading Data from Streaming Source

DataStreamReader is the interface to describe how data is loaded to a streaming Dataset from a streaming source.

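| Method | Description |
|---|---|
| csv | Sets csv as the format of the data source |
| format | Specifies the format of the data source. The format is used internally as the name (alias) of the streaming source to use to load the data |
| json | Sets json as the format of the data source |
| load | Creates a streaming DataFrame that represents loading data from a streaming source |
| option | Sets a loading option |
| options | Specifies the configuration options of a data source |
| orc | Sets orc as the format of the data source |
| parquet | Sets parquet as the format of the data source |
| schema | Specifies the user-defined schema of the streaming data source (as a StructType or a DDL-formatted String) |
| text | Sets text as the format of the data source |
| textFile | Loads text data as a Dataset[String] (not a DataFrame) |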

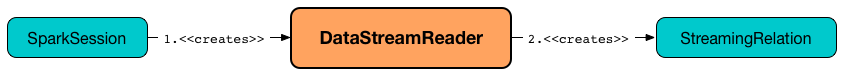

Spark developers use DataStreamReader to describe how Spark Structured Streaming loads datasets from a streaming source (which in the end creates a logical plan for a streaming query).

Note: DataStreamReader is the Spark developer-friendly API to create a StreamingRelation logical operator (that represents a streaming source in a logical plan).

You can access DataStreamReader using the SparkSession.readStream method.

    import org.apache.spark.sql.SparkSession

    val spark: SparkSession = ...

    val streamReader = spark.readStream

DataStreamReader supports many source formats natively and offers the interface to define custom formats.

Note: DataStreamReader assumes the parquet file format by default, which you can change using the spark.sql.sources.default property.

Note: The hive source format is not supported.

After you have described the streaming pipeline to read datasets from an external streaming data source, you eventually trigger the loading using format-agnostic load or format-specific (e.g. json, csv) operators.
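As a minimal sketch of that describe-then-load flow, the snippet below describes a JSON file stream and triggers the loading with load. The local session, the temporary input directory, the column names, and the maxFilesPerTrigger value are assumptions made for illustration only and do not come from the text above.

```scala
import java.nio.file.Files

import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.types.{LongType, StringType, StructType}

// Local session for experimentation (reuses an existing one, e.g. in spark-shell)
val spark = SparkSession.builder().master("local[*]").appName("reader-demo").getOrCreate()

// Hypothetical input directory; file-based streaming sources expect the path to exist
val inputDir = Files.createTempDirectory("people-json").toString

// File-based streaming sources need a user-defined schema unless schema inference is enabled
val schema = new StructType()
  .add("id", LongType)
  .add("name", StringType)

// Describe the source step by step, then trigger the loading with the format-agnostic load
val people: DataFrame = spark.readStream
  .format("json")
  .schema(schema)
  .option("maxFilesPerTrigger", 1L)
  .load(inputDir)

// No data is read at this point: the result only describes a streaming query input
assert(people.isStreaming)
people.printSchema()
```

The DataFrame only becomes an active query once it is written out with writeStream and started, which is outside the scope of DataStreamReader.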

| Name | Initial Value | Description |
|---|---|---|
| source | spark.sql.sources.default property | Source format of datasets in a streaming data source |
| userSpecifiedSchema | (empty) | Optional user-defined schema |
| extraOptions | (empty) | Collection of key-value configuration options |

Specifying Loading Options — option Method

    option(key: String, value: String): DataStreamReader
    option(key: String, value: Boolean): DataStreamReader
    option(key: String, value: Long): DataStreamReader
    option(key: String, value: Double): DataStreamReader

The option family of methods specifies additional options for a streaming data source.

For user convenience, values of String, Boolean, Long, and Double types are supported. Internally, they are all converted to String.

Internally, option sets the extraOptions internal property.

Note: You can also set options in bulk using the options method. You have to do the type conversion yourself, though.
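The difference between the typed option overloads and the bulk options method can be sketched as follows. This is an illustration under assumptions: the local session and the temporary directory are made up for the example, and maxFilesPerTrigger and latestFirst are used simply as two file source options that take a number and a flag.

```scala
import java.nio.file.Files

import org.apache.spark.sql.SparkSession

// Local session for experimentation (reuses an existing one, e.g. in spark-shell)
val spark = SparkSession.builder().master("local[*]").appName("option-demo").getOrCreate()

// Hypothetical input directory; the text format needs no user-defined schema
val inputDir = Files.createTempDirectory("text-in").toString

// Typed overloads: the Long and Boolean values are kept as strings internally
val oneByOne = spark.readStream
  .format("text")
  .option("maxFilesPerTrigger", 1L)
  .option("latestFirst", true)
  .load(inputDir)

// Bulk variant: every value has to be converted to a String by the caller
val inBulk = spark.readStream
  .format("text")
  .options(Map("maxFilesPerTrigger" -> "1", "latestFirst" -> "true"))
  .load(inputDir)

// Both readers describe the same kind of streaming source
assert(oneByOne.isStreaming && inBulk.isStreaming)
assert(oneByOne.schema == inBulk.schema)
```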

Creating Streaming Dataset (to Represent Loading Data From Streaming Source) — load Method

    load(): DataFrame
    load(path: String): DataFrame  (1)

1. Specifies the path option before passing the call to the parameterless load()

load…FIXME
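The relationship between load(path) and the path option can be sketched as below. It is a small illustration rather than a description of the internals: the local session and the temporary directory are assumptions, and the text format is chosen only because it needs no user-defined schema.

```scala
import java.nio.file.Files

import org.apache.spark.sql.SparkSession

// Local session for experimentation (reuses an existing one, e.g. in spark-shell)
val spark = SparkSession.builder().master("local[*]").appName("load-demo").getOrCreate()

// Hypothetical input directory for the file-based source
val inputDir = Files.createTempDirectory("stream-in").toString

// Path given directly to load
val viaPath = spark.readStream.format("text").load(inputDir)

// Path given as an option, followed by the parameterless load
val viaOption = spark.readStream.format("text").option("path", inputDir).load()

// Both describe a streaming DataFrame over the same source with the same schema
assert(viaPath.isStreaming && viaOption.isStreaming)
assert(viaPath.schema == viaOption.schema)
```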

Built-in Formats

    json(path: String): DataFrame
    csv(path: String): DataFrame
    parquet(path: String): DataFrame
    text(path: String): DataFrame
    textFile(path: String): Dataset[String]  (1)

1. Returns Dataset[String], not DataFrame

DataStreamReader can load streaming datasets from data sources of the following formats:

- json
- csv
- parquet
- text

The methods simply pass calls to format followed by load(path).
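To illustrate that shortcut, the sketch below builds the same CSV stream twice, once with csv(path) and once with format followed by load(path), and also shows textFile returning a Dataset[String]. The local session, the temporary directory, and the two-column schema are assumptions made for the example.

```scala
import java.nio.file.Files

import org.apache.spark.sql.{DataFrame, Dataset, SparkSession}
import org.apache.spark.sql.types.{IntegerType, StringType, StructType}

// Local session for experimentation (reuses an existing one, e.g. in spark-shell)
val spark = SparkSession.builder().master("local[*]").appName("formats-demo").getOrCreate()

// Hypothetical input directory and schema, used only for illustration
val inputDir = Files.createTempDirectory("csv-in").toString
val schema = new StructType()
  .add("id", IntegerType)
  .add("city", StringType)

// Format-specific operator
val viaShortcut: DataFrame = spark.readStream.schema(schema).csv(inputDir)

// The long form: format followed by load(path)
val viaFormat: DataFrame = spark.readStream.schema(schema).format("csv").load(inputDir)

// textFile gives a typed Dataset[String] instead of a DataFrame
val lines: Dataset[String] = spark.readStream.textFile(inputDir)

assert(viaShortcut.schema == viaFormat.schema)
assert(lines.isStreaming)
```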